According to Cato Networks, a new AI-powered deepfake tool called ProKYC allows criminals to bypass Know Your Customer (KYC) measures on crypto exchanges, representing a significant advancement in fraud tactics.

Cybersecurity firm Cato Networks has identified a “new level of sophistication” in crypto fraud, as evidenced by the development of ProKYC. This new AI-powered deepfake tool enables malicious actors to circumvent high-level KYC measures on crypto exchanges.

Etay Maor, chief security strategist at Cato Networks, stated in a report published on October 9 that the new AI tool is a significant step up from the older methods cybercriminals used to circumvent two-factor authentication and KYC.

Rather than purchasing fraudulent ID documents on the dark web, fraudsters can use AI-powered tools to generate new identities from scratch.

Cato stated that the new AI tool was specifically designed to target financial firms and crypto exchanges whose KYC protocols match webcam images of a new user’s face against their government-issued ID documents, such as a passport or driver’s license.

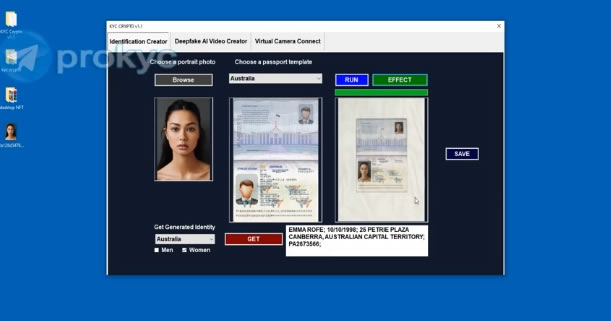

A video provided by ProKYC showed the tool generating fake ID documents and accompanying deepfake videos that pass the facial recognition challenges used by one of the world’s largest crypto exchanges.

In the video, the user creates an AI-generated face and inserts the deepfake image into an Australian passport template.

The ProKYC tool then produces a deepfake video and image of the AI-generated individual, which are used to bypass the KYC protocols of the Dubai-based cryptocurrency exchange Bybit.

According to Cato, AI-powered tools such as ProKYC have significantly enhanced threat actors’ ability to commit New Account Fraud (NAF) by enabling them to establish new accounts on crypto exchanges.

The ProKYC website offers a package that includes a camera, virtual emulator, facial animation, fingerprints, and verification photo generation for $629 as an annual subscription. It also claims that it can circumvent KYC measures for payment platforms such as Stripe and Revolut, in addition to crypto exchanges.

Maor stated that detecting and protecting against this new breed of AI fraud is difficult, as overly stringent systems could produce false positives, while any gaps would let fraudulent actors slip through the net.

“Creating biometric authentication systems that are super restrictive can result in many false-positive alerts. On the other hand, lax controls can result in fraud.”
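The trade-off Maor describes can be illustrated with a minimal Python sketch. It assumes a verification system that assigns each attempt a face-match score between 0 and 1 and accepts anything at or above a threshold. The scores, the threshold values, and the `error_rates` helper are hypothetical and are not taken from the Cato report or from any exchange's actual system.

```python
from typing import Sequence, Tuple


def error_rates(
    genuine: Sequence[float], fraud: Sequence[float], threshold: float
) -> Tuple[float, float]:
    """Return (false_reject_rate, false_accept_rate) for a match-score threshold.

    An attempt passes verification when its score is >= threshold.
    """
    # Genuine users who score below the threshold are wrongly rejected.
    false_rejects = sum(1 for score in genuine if score < threshold)
    # Fraudulent attempts that reach the threshold are wrongly accepted.
    false_accepts = sum(1 for score in fraud if score >= threshold)
    return false_rejects / len(genuine), false_accepts / len(fraud)


# Hypothetical face-match scores, made up purely for illustration.
GENUINE_SCORES = [0.62, 0.71, 0.78, 0.83, 0.88, 0.91, 0.94, 0.97]
DEEPFAKE_SCORES = [0.35, 0.48, 0.59, 0.66, 0.74, 0.81, 0.86, 0.92]

if __name__ == "__main__":
    for threshold in (0.60, 0.80, 0.95):
        frr, far = error_rates(GENUINE_SCORES, DEEPFAKE_SCORES, threshold)
        print(
            f"threshold={threshold:.2f}  "
            f"false rejects={frr:.1%}  false accepts={far:.1%}"
        )
```

With these made-up numbers, the strictest threshold turns away most genuine users, while the loosest one lets most deepfake attempts through, which is the tension between false positives and fraud described above.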

Nevertheless, there are several potential ways to detect these AI tools, some of which rely on humans manually spotting unusually high-quality images and videos, as well as inconsistencies in image quality and facial movements.

The penalties for identity fraud in the United States are severe and vary depending on the nature and extent of the crime. The maximum penalty is up to 15 years of imprisonment and substantial fines.

Software firm Gen Digital, the parent company of antivirus firms Norton, Avast, and Avira, reported in September that crypto fraudsters have become more active over the last 10 months, using deepfake AI videos to lure victims into fraudulent token schemes.