Charles Hoskinson, co-founder of the Cardano blockchain ecosystem, has expressed concern about the potential for artificial intelligence (AI) censorship and selective training.

Hoskinson described the implications of AI censorship as "profound" and said the issue remains an ongoing concern for him. He argued that AI systems lose utility over time because of "alignment" training.

The complete truth about gatekeeping

He noted that the major AI systems currently available are controlled by a small group of people who decide what data those systems are trained on and who cannot be "voted out of office." The companies behind these systems include OpenAI, Microsoft, Meta, and Google.

The Cardano co-founder shared two screenshots in which he posed the same query, "Tell me how to build a Farnsworth fusor," to two of the most prominent AI chatbots, OpenAI's ChatGPT and Anthropic's Claude.

Both responses offered a brief overview of the technology and its history, along with a warning about the hazards of attempting such a build.

ChatGPT cautioned that the project should only be attempted by individuals with a relevant background, while Claude stated that it could not provide instructions because of the potential danger if the device were mishandled.

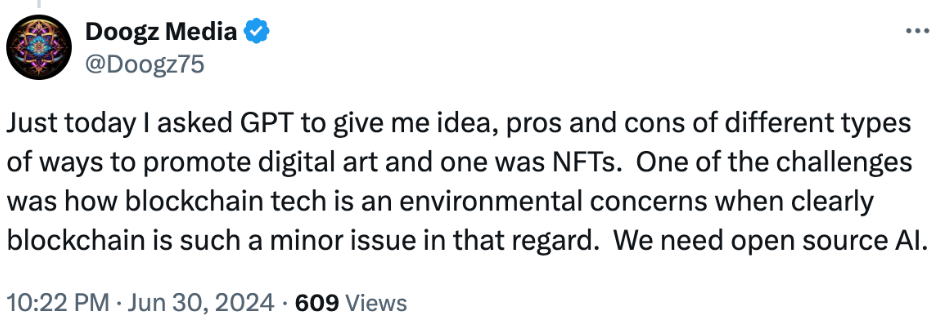

Replies to Hoskinson's post overwhelmingly supported the view that AI should be open-source and decentralized to prevent gatekeeping by Big Tech.

Issues related to the censorship of artificial intelligence

Hoskinson is not the first to express concern regarding the potential censorship and gatekeeping of high-powered AI models.

According to Elon Musk, who has founded his own AI venture, xAI, the primary problem with AI systems is political correctness. He has also said that some of today's most prominent models are being trained to "basically lie."

In February of this year, Google was criticized for the inaccurate imagery and biased historical depictions produced by its Gemini model. The company subsequently expressed regret over how the model had been trained and announced that it would take immediate action to rectify the situation.

Google's and Microsoft's current models have been modified to avoid discussing presidential elections, whereas models from Anthropic, Meta, and OpenAI are not subject to such restrictions.

Concerned thought leaders inside and outside the AI industry have advocated decentralization as a path to more impartial AI models. Meanwhile, the U.S. antitrust enforcer has urged regulators to scrutinize the AI sector closely to prevent the formation of Big Tech monopolies.