Tech companies developing new artificial intelligence (AI) tools were advised by the Indian government that such tools require government approval before being released to the public.

As per the advisory issued by the IT Ministry of India on March 1, this sanction must be obtained before the public debut of AI tools deemed “unreliable” or still in the trial phase.

Furthermore, such tools should be appropriately labeled to indicate the possibility that they may deliver inaccurate responses to inquiries. Moreover, the ministry stated:

“Availability to the users on Indian Internet must be done so with explicit permission of the Government of India.”

In light of the upcoming general elections this summer, the advisory further requested that platforms ensure their tools do not “endanger the integrity of the electoral process.”

The advisory comes shortly after one of India’s highest-ranking ministers criticized Google and its AI tool Gemini for providing “inaccurate” or biased responses, one of which claimed that certain individuals have labeled Indian Prime Minister Narendra Modi a “fascist.”

Google stated that Gemini “may not always be reliable,” especially about timely social issues, and apologized for its deficiencies.

The deputy IT minister of India, Rajeev Chandrasekhar, stated on X: “Safety and trust are the legal obligations of [a platform]. ‘Sorry Unreliable’ is not a legal exemption.”

The Indian government announced in November that it would implement new regulations to combat the spread of deepfakes generated by artificial intelligence before its forthcoming elections, a measure regulators in the United States have also adopted.

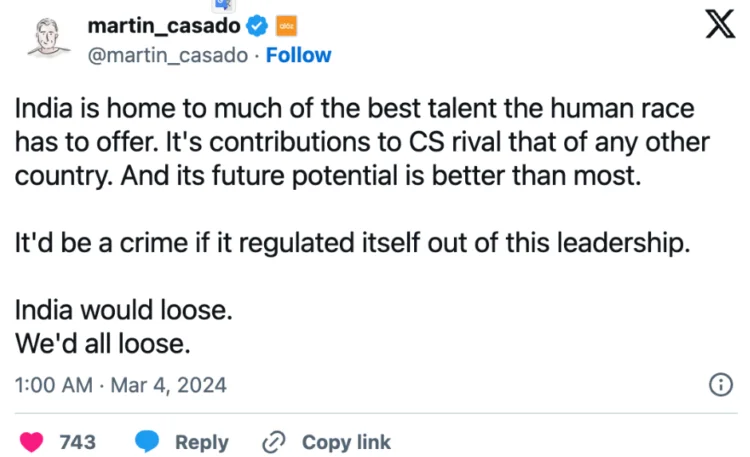

The tech community reacted negatively to the government’s most recent AI advisory, arguing that India is a leader in the technology sector and that it would be a “crime” for the country to “regulate itself out of this leadership.”

In response to this “noise and confusion,” Chandrasekhar stated in a follow-up post on X that platforms that “enable or directly output unlawful content” should face “legal consequences.” He added:

“India believes in AI and is all in not just for talent but also as part of expanding our Digital & Innovation ecosystem. India’s ambitions in AI and ensuring Internet users get a safe and trusted internet are not binaries.”

Furthermore, he specified that the purpose of the advisory was to “advise those deploying lab-level or under-tested AI platforms onto the public internet” about their responsibilities and the repercussions that Indian law imposes, as well as the most effective means of protecting themselves and their users.

Microsoft and Indian AI startup Sarvam partnered on February 8 to integrate an Indic-voice large language model (LLM) into Azure AI to expand their user base in the Indian subcontinent.