US lawmakers have introduced the NO FAKES Act to combat AI misuse, targeting the spread of unauthorized deepfakes.

As artificial intelligence continues to develop, its capabilities are increasingly being abused, with some individuals using the technology to defraud cryptocurrency users. In response to this growing threat, US lawmakers have introduced a new measure to protect citizens from AI-generated deepfakes.

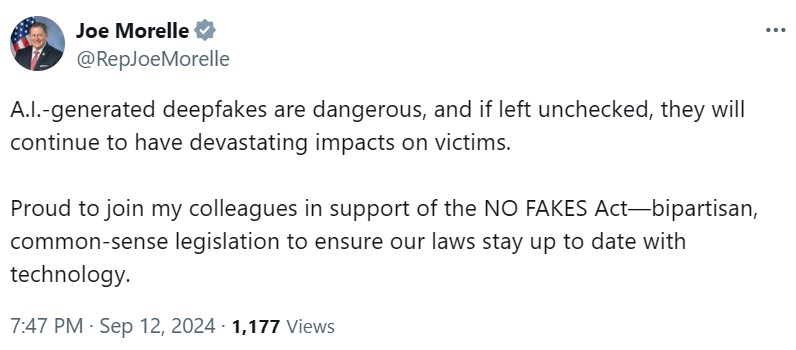

The Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act was introduced by Representatives Madeleine Dean and María Elvira Salazar on September 12. The legislation is designed to safeguard Americans from AI exploitation and combat the proliferation of unauthorized AI-generated deepfakes.

The lawmakers said in a press release that the NO FAKES Act will enable individuals to take action against bad actors who create, post, or profit from unauthorized digital reproductions of them. It will also shield media platforms from liability if they take down the offending content.

According to the announcement, the bill is also intended to protect free speech and innovation. However, not everyone is convinced that it will.

Private Censorship Recipe

Corynne McSherry, the legal director at the Electronic Frontier Foundation, a nonprofit organization concentrating on digital rights, thinks that the NO FAKES Act could serve as a “recipe for private censorship.”

McSherry said in August that the measure may be good for lawyers, but that it would be a “nightmare” for the general public. She argued that the NO FAKES Act provides fewer protections for lawful speech than the Digital Millennium Copyright Act (DMCA), which protects copyrighted material.

According to McSherry, the DMCA provides a straightforward counter-notice procedure that individuals can use to have their work restored. NO FAKES, by contrast, requires individuals to go to court within 14 days to assert their rights. McSherry elaborated:

“The powerful have lawyers on retainer who can do that, but most creators, activists, and citizen journalists do not.”

AI deepfake crypto fraudsters are expanding their operations

Gen Digital, a software company, reported in the second quarter of 2024 that fraudsters had stolen at least $5 million in cryptocurrency using AI deepfakes. The firm advised users to remain vigilant, as AI makes scams more sophisticated and more convincing.

Meanwhile, CertiK, a Web3 security company, anticipates that AI-powered attacks will expand beyond video and audio and may target wallets that use facial recognition.

A CertiK spokesperson told Cointelegraph that wallets implementing this feature must assess their preparedness for AI attack vectors.