Ethereum co-founder Vitalik Buterin has praised artificial intelligence's potential to detect vulnerabilities in the Ethereum codebase.

According to Ethereum co-founder Vitalik Buterin, artificial intelligence (AI) could become indispensable in tackling what he calls the platform's "biggest technical risk": bugs hidden deep within its code.

In a February 18 post on X, Buterin said he was excited about using AI-powered auditing to detect and fix flawed code in the Ethereum network, calling such bugs the network's "biggest technical risk."

One application of AI that I am excited about is AI-assisted formal verification of code and bug finding.

Right now ethereum's biggest technical risk probably is bugs in code, and anything that could significantly change the game on that would be amazing.

— vitalik.eth (@VitalikButerin) February 19, 2024

Buterin's remarks come shortly before the rollout of Ethereum's highly anticipated Dencun upgrade, which is scheduled to go live on March 13.

On January 17, Dencun was deployed to the Goerli testnet; however, a bug in the Prysm client prevented the network from finalizing for four hours. Continued improvements to the network are essential to the blockchain's long-term trajectory.

Not everyone, however, is convinced that AI is a reliable tool for identifying vulnerabilities in Ethereum-based code.

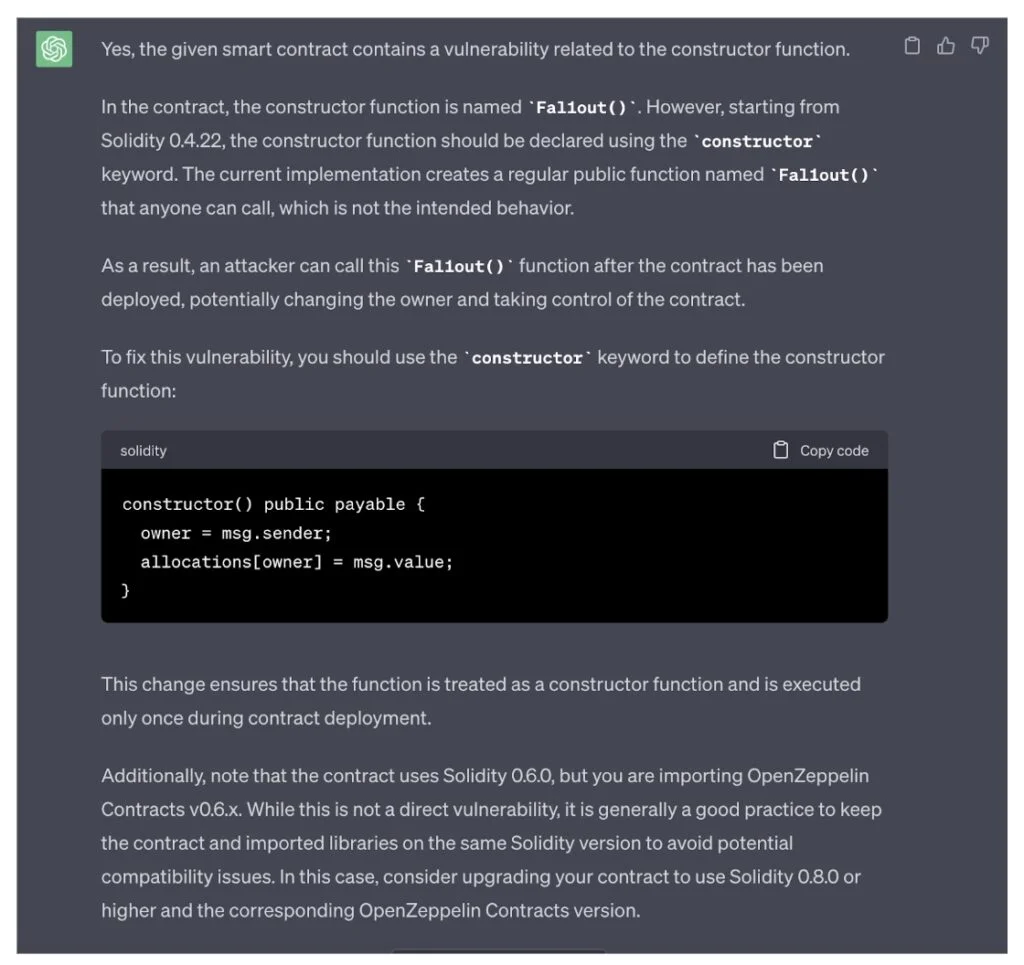

In July 2023, OpenZeppelin ran a series of experiments in which it used OpenAI's GPT-4 to detect vulnerabilities in smart contracts written in Solidity, Ethereum's native programming language.

In these tests, GPT-4 correctly identified the vulnerabilities in 20 of the 28 challenges.

When GPT-4 missed a defect, it could often be prompted to correct its mistake quickly. On other occasions, however, OpenZeppelin found that the AI invented a vulnerability that did not actually exist.

Similarly, Kang Li, chief security officer at CertiK, told Cointelegraph that AI-powered coding tools such as ChatGPT often create more security problems than they solve.

Li recommends that AI assistants be used only by experienced programmers, for whom they can be helpful in quickly explaining what a given line of code does.

“I think ChatGPT is a great helpful tool for people doing code analysis and reverse engineering. It’s definitely a good assistant, and it’ll improve our efficiency tremendously.”

Although Buterin is generally optimistic about the future of AI, he has previously urged developers to be careful when integrating AI into blockchain technology, especially in "high-risk" applications such as oracles.

“Exercising caution is crucial: if an individual constructs a stablecoin or prediction market, for instance, relying on an AI oracle that is compromised, that substantial sum of money could vanish in an instant.”