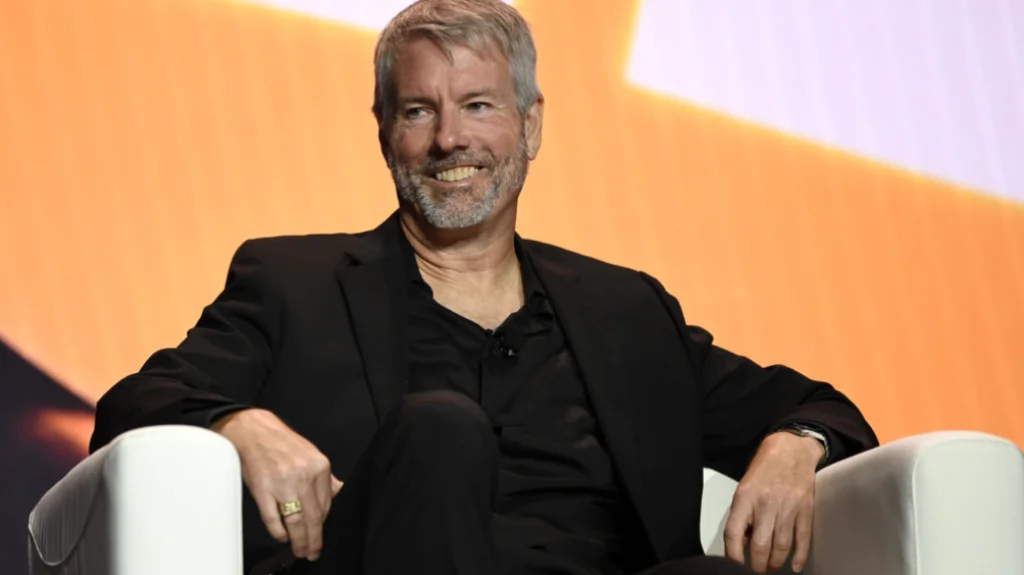

Every day, the team of MicroStrategy executive chairman Michael Saylor removes approximately 80 artificial intelligence (AI)-generated deepfake videos featuring him, most of them promoting Bitcoin-related scams.

On January 13, Saylor warned his 3.2 million followers on X (formerly Twitter) about the proliferation of deepfake videos on YouTube, stating that “the con artists continue to release more.”

He added, “There is no risk-free method to double your bitcoin, and @MicroStrategy does not award $BTC to individuals who scan a barcode.”

Last week, several X users flagged fraudulent AI-generated videos in which Saylor purportedly offers to double viewers’ funds. The videos instruct viewers to scan a QR code that sends Bitcoin to the scammer’s address.

A similar situation arose in 2022, when numerous fake Elon Musk videos surfaced on YouTube.

In January, a deepfake video of Solana co-founder Anatoly Yakovenko circulated on social media and YouTube.

Austin Federa, head of strategy at the Solana Foundation, told The Verge: “Recently, there has been a significant increase in deepfakes and other AI-generated content.”

In December 2023, cybersecurity experts warned that AI-powered deepfake videos will become increasingly realistic as the technology advances.

Jesse Leclere, an analyst at blockchain security firm CertiK, told Cointelegraph that generative AI is a significant factor in the growing sophistication of phishing attacks.

Jerry Peng, a researcher at 0xScope, added that AI could play a crucial role in producing ever more lifelike deepfakes designed to deceive cryptocurrency users.

On January 9, legislators in the United States warned that generative AI tools could lower the technical barrier to entry for fraudsters.

However, Rob Joyce, director of cybersecurity at the National Security Agency, argued that AI could also help law enforcement track down illicit activity.